In the early days of cloud computing, a common narrative among providers like Amazon and Microsoft was that idle time was the primary driver of their offerings. The servers in their ever-growing data centers spent most of their time idle, they said, so what better way to maximize compute time than by leasing those spare cycles to developers and businesses, many of whom couldn’t afford their own iron?

The rest, we know, is history. This business decision transformed the economics of starting countless new software businesses, while rendering on-premises server rooms a corporate oddity of empty spaces with raised subfloors and zip-tied bundles of unused patch cable.

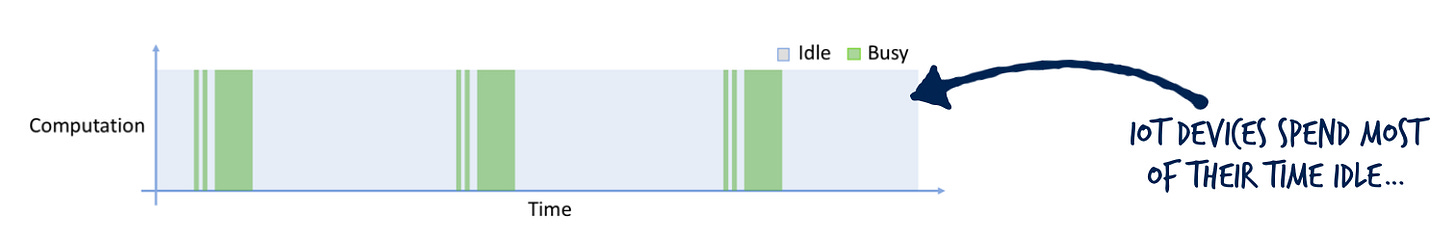

What does this have to do with the IoT, you might ask? As it turns out, much like the pre-cloud infrastructure of old, many of our IoT devices spend most of their time idle. Some of those devices are in low-power sleep states, but many are not.

I’m not the first to make this observation and don’t claim it as an original thought, but I do find it quite compelling. In an era when even inexpensive Arm Cortex-based devices are several times faster and more capable than your first Pentium computer, what is one to do with all that spare compute across a fleet of tiny IoT devices?

There are a number of things that come to mind, but ML inferencing feels, to me, like the most compelling of all. Models are merely large collections of weights and biases to be run against unseen data. As quantization becomes more commonplace and accessible in frameworks like TensorFlow Lite, perhaps we’ll find that the next rent economy lives not in the cloud, but within the Internet of Things?
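To make “quantization” a little more concrete, here is a minimal, framework-free sketch of affine (asymmetric) int8 quantization: each float weight is mapped to an 8-bit integer using a scale and a zero point, shrinking float32 storage by a factor of four at the cost of a small rounding error. This is an illustration of the general technique only, not TensorFlow Lite’s implementation; the function names are hypothetical, and real toolchains also handle per-channel scales, activations, and calibration data.

```python
"""Sketch of affine (asymmetric) int8 quantization in plain Python.

Illustrative only: function names are hypothetical, and real toolchains
(e.g. TensorFlow Lite) add per-channel scales, calibration, and more.
"""


def quantization_params(values, qmin=-128, qmax=127):
    """Choose a scale and zero point so the range of `values` maps onto [qmin, qmax]."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # Width of one integer step in real units; fall back to 1.0 for all-zero input.
    scale = (hi - lo) / (qmax - qmin) or 1.0
    # The integer that represents real 0.0, clamped into the valid range.
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to 8-bit integers, clamping anything that falls outside [qmin, qmax]."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Map 8-bit integers back to approximate floats."""
    return [(q - zero_point) * scale for q in qvalues]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
    scale, zp = quantization_params(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    print("scale:", scale, "zero point:", zp)
    print("quantized:", q)
    print("restored:", [round(r, 3) for r in restored])
```

Each restored weight lands within about one quantization step (`scale`) of the original, and 0.0 survives the round trip exactly, which matters for things like zero padding.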

Hyperbolic? Maybe. But then again, who ever thought, way back in 1999, that we’d all end up leasing server space from our favorite bookstore?

ML & IoT News and Observations

I’m a big fan of the Google Coral products, and have built and presented a few demos with the Dev Board. So has Zack Akil. He gave a fantastic talk at PyData London that you can watch here. Zack built a scale model of a bike to demonstrate using the Coral USB compute stick and a Raspberry Pi 4 for real-time vehicle detection: a sort of LED-powered rear-view safety camera. It’s a compelling talk, and Zack does an especially good job explaining quantization, an essential ingredient of the edge/IoT inferencing workflow.

The TensorFlow Lite team has released a Model Optimization Toolkit and a set of guides. While not exclusively for use in edge/IoT scenarios, edge inferencing is a major use case. I’ve not been able to try this toolkit out on my own projects yet, but plan to soon.

If you’re in the San Francisco Bay Area, there’s a tinyML meetup down in San Jose, hosted by Qualcomm. I believe the plan is to meet on the last Thursday of each month. I’m planning to attend the next time I’m in the area (September), so please consider joining me if you can!

The tinyML group has also announced the second tinyML summit, planned for February of 2020. Stay tuned to that link for updates, and perhaps we’ll see each other there, as well.